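This article explains how to install Qwen-Image-Edit.

Install ComfyUI first. The latest version is required.

For the installation steps, see the Portable version installation section of the linked article.

The steps for installing Qwen-Image-Edit are described below.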

From the following Hugging Face Hub page, download the fp8 model for Qwen-Image-Edit (2509 version), qwen_image_edit_2509_fp8_e4m3fn.safetensors.

From the following Hugging Face Hub page, download the fp8 model for the original Qwen-Image-Edit, qwen_image_edit_fp8_e4m3fn.safetensors.

Place the downloaded models in the following directory.

(ComfyUI install directory)\models\diffusion_models\

or

(ComfyUI install directory)\models\diffusion_models\qwen-image-edit\

From the following Hugging Face Hub page, download the Qwen-Image text encoder, qwen_2.5_vl_7b_fp8_scaled.safetensors. (This is the same file used for Qwen-Image.)

Place the downloaded model in the following directory.

(ComfyUI install directory)\models\text_encoders\

or

(ComfyUI install directory)\models\text_encoders\qwen-image\

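From the following Hugging Face Hub page, download the Qwen-Image VAE, qwen_image_vae.safetensors. (This is the same file used for Qwen-Image.)

Place the downloaded model in the following directory.

(ComfyUI install directory)\models\vae\

or

(ComfyUI install directory)\models\vae\qwen-image\

This completes the file placement.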
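The placement described above can be double-checked with a short script. This is a minimal sketch and not part of ComfyUI: `find_missing_models` and `EXPECTED_FILES` are hypothetical names introduced here, and the sketch assumes that each file may sit either directly in its model directory or in the subfolder named in the steps above.

```python
from pathlib import Path

# (directory under models/, optional subfolder, file name), taken from the steps above.
EXPECTED_FILES = [
    ("diffusion_models", "qwen-image-edit", "qwen_image_edit_2509_fp8_e4m3fn.safetensors"),
    ("diffusion_models", "qwen-image-edit", "qwen_image_edit_fp8_e4m3fn.safetensors"),
    ("text_encoders", "qwen-image", "qwen_2.5_vl_7b_fp8_scaled.safetensors"),
    ("vae", "qwen-image", "qwen_image_vae.safetensors"),
]


def find_missing_models(comfyui_root):
    """Return the expected file names that exist in neither allowed location."""
    models_dir = Path(comfyui_root) / "models"
    missing = []
    for category, subfolder, file_name in EXPECTED_FILES:
        candidates = [
            models_dir / category / file_name,              # placed directly
            models_dir / category / subfolder / file_name,  # placed in the subfolder
        ]
        if not any(path.is_file() for path in candidates):
            missing.append(file_name)
    return missing
```

Calling `find_missing_models` with the ComfyUI install directory returns an empty list when every file is in place. If only one of the two Qwen-Image-Edit models was downloaded, the other is reported as missing, which is harmless as long as the workflow in use does not load it.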

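Start ComfyUI and create the following workflow. This workflow loads the qwen_image_edit_2509_fp8_e4m3fn.safetensors model.

The [TextEncodeQwenImageEditPlus] node is in the following menu.

[Add Node] > [advanced] > [conditioning] > [TextEncodeQwenImageEditPlus]

The [CFGNorm] node is in the following menu.

[Add Node] > [advanced] > [guidance] > [CFGNorm]

The workflow JSON is as follows.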

{

"id": "6a5f7751-1158-4b96-98bc-e6736ab017f2",

"revision": 0,

"last_node_id": 134,

"last_link_id": 269,

"nodes": [

{

"id": 121,

"type": "CLIPLoader",

"pos": [

-259.2861633300781,

204.64100646972656

],

"size": [

500,

110

],

"flags": {},

"order": 0,

"mode": 0,

"inputs": [],

"outputs": [

{

"name": "CLIP",

"type": "CLIP",

"links": [

262,

265

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.48",

"Node name for S&R": "CLIPLoader"

},

"widgets_values": [

"qwen-image\\qwen_2.5_vl_7b_fp8_scaled.safetensors",

"qwen_image",

"default"

]

},

{

"id": 5,

"type": "CLIPTextEncode",

"pos": [

329.2820129394531,

474.30780029296875

],

"size": [

500,

90

],

"flags": {

"collapsed": false

},

"order": 4,

"mode": 0,

"inputs": [

{

"name": "clip",

"type": "CLIP",

"link": 262

}

],

"outputs": [

{

"name": "CONDITIONING",

"type": "CONDITIONING",

"slot_index": 0,

"links": [

22

]

}

],

"title": "CLIP Text Encode (Negative)",

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.47",

"Node name for S&R": "CLIPTextEncode"

},

"widgets_values": [

""

]

},

{

"id": 21,

"type": "VAEDecode",

"pos": [

1342.4583740234375,

-68.53590393066406

],

"size": [

200,

60

],

"flags": {

"collapsed": false

},

"order": 12,

"mode": 0,

"inputs": [

{

"name": "samples",

"type": "LATENT",

"link": 115

},

{

"name": "vae",

"type": "VAE",

"link": 211

}

],

"outputs": [

{

"name": "IMAGE",

"type": "IMAGE",

"slot_index": 0,

"links": [

112

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.47",

"Node name for S&R": "VAEDecode"

},

"widgets_values": []

},

{

"id": 66,

"type": "SaveImage",

"pos": [

1589.38232421875,

-68.40391540527344

],

"size": [

518.892822265625,

429.1415100097656

],

"flags": {},

"order": 13,

"mode": 0,

"inputs": [

{

"name": "images",

"type": "IMAGE",

"link": 112

}

],

"outputs": [],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.47",

"Node name for S&R": "SaveImage"

},

"widgets_values": [

"%date:yyyyMMdd_hhmmss%_t2i_Qwen-Image-fp8_test"

]

},

{

"id": 124,

"type": "EmptySD3LatentImage",

"pos": [

598.39599609375,

620.0835571289062

],

"size": [

330,

110

],

"flags": {},

"order": 10,

"mode": 0,

"inputs": [

{

"name": "width",

"type": "INT",

"widget": {

"name": "width"

},

"link": 259

},

{

"name": "height",

"type": "INT",

"widget": {

"name": "height"

},

"link": 260

},

{

"name": "batch_size",

"type": "INT",

"widget": {

"name": "batch_size"

},

"link": 261

}

],

"outputs": [

{

"name": "LATENT",

"type": "LATENT",

"links": [

250

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.48",

"Node name for S&R": "EmptySD3LatentImage"

},

"widgets_values": [

928,

1664,

1

]

},

{

"id": 128,

"type": "FluxKontextImageScale",

"pos": [

80.96746826171875,

630.9010620117188

],

"size": [

187.75448608398438,

26

],

"flags": {},

"order": 5,

"mode": 0,

"inputs": [

{

"name": "image",

"type": "IMAGE",

"link": 256

}

],

"outputs": [

{

"name": "IMAGE",

"type": "IMAGE",

"links": [

258,

266

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.51",

"Node name for S&R": "FluxKontextImageScale"

},

"widgets_values": []

},

{

"id": 131,

"type": "GetImageSize",

"pos": [

366.3339538574219,

629.4735107421875

],

"size": [

140,

124

],

"flags": {},

"order": 7,

"mode": 0,

"inputs": [

{

"name": "image",

"type": "IMAGE",

"link": 258

}

],

"outputs": [

{

"name": "width",

"type": "INT",

"links": [

259

]

},

{

"name": "height",

"type": "INT",

"links": [

260

]

},

{

"name": "batch_size",

"type": "INT",

"links": [

261

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.51",

"Node name for S&R": "GetImageSize"

},

"widgets_values": [

"width: 832, height: 1248\n batch size: 1"

]

},

{

"id": 126,

"type": "LoadImage",

"pos": [

-360.30859375,

628.9431762695312

],

"size": [

413.09088134765625,

406.1212463378906

],

"flags": {},

"order": 1,

"mode": 0,

"inputs": [],

"outputs": [

{

"name": "IMAGE",

"type": "IMAGE",

"links": [

256

]

},

{

"name": "MASK",

"type": "MASK",

"links": null

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.51",

"Node name for S&R": "LoadImage"

},

"widgets_values": [

"08.png",

"image"

]

},

{

"id": 16,

"type": "KSampler",

"pos": [

979.0615844726562,

58.50777816772461

],

"size": [

330,

270

],

"flags": {},

"order": 11,

"mode": 0,

"inputs": [

{

"name": "model",

"type": "MODEL",

"link": 269

},

{

"name": "positive",

"type": "CONDITIONING",

"link": 267

},

{

"name": "negative",

"type": "CONDITIONING",

"link": 22

},

{

"name": "latent_image",

"type": "LATENT",

"link": 250

}

],

"outputs": [

{

"name": "LATENT",

"type": "LATENT",

"slot_index": 0,

"links": [

115

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.47",

"Node name for S&R": "KSampler"

},

"widgets_values": [

741661333780048,

"randomize",

20,

2.5,

"euler",

"simple",

1

]

},

{

"id": 75,

"type": "VAELoader",

"pos": [

-259.2861633300781,

-55.35899353027344

],

"size": [

500,

70

],

"flags": {},

"order": 2,

"mode": 0,

"inputs": [],

"outputs": [

{

"name": "VAE",

"type": "VAE",

"slot_index": 0,

"links": [

211,

264

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.47",

"Node name for S&R": "VAELoader"

},

"widgets_values": [

"qwen-image\\qwen_image_vae.safetensors"

]

},

{

"id": 132,

"type": "TextEncodeQwenImageEditPlus",

"pos": [

338.8173828125,

184.61123657226562

],

"size": [

486.5150146484375,

219.96499633789062

],

"flags": {},

"order": 8,

"mode": 0,

"inputs": [

{

"name": "clip",

"type": "CLIP",

"link": 265

},

{

"name": "vae",

"shape": 7,

"type": "VAE",

"link": 264

},

{

"name": "image1",

"shape": 7,

"type": "IMAGE",

"link": 266

},

{

"name": "image2",

"shape": 7,

"type": "IMAGE",

"link": null

},

{

"name": "image3",

"shape": 7,

"type": "IMAGE",

"link": null

}

],

"outputs": [

{

"name": "CONDITIONING",

"type": "CONDITIONING",

"links": [

267

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.60",

"Node name for S&R": "TextEncodeQwenImageEditPlus"

},

"widgets_values": [

"sitting on stool.put feet forward,"

]

},

{

"id": 68,

"type": "UNETLoader",

"pos": [

-259.2861633300781,

64.64099884033203

],

"size": [

500,

90

],

"flags": {},

"order": 3,

"mode": 0,

"inputs": [],

"outputs": [

{

"name": "MODEL",

"type": "MODEL",

"slot_index": 0,

"links": [

246

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.47",

"Node name for S&R": "UNETLoader"

},

"widgets_values": [

"qwen-image-edit\\qwen_image_edit_2509_fp8_e4m3fn.safetensors",

"default"

]

},

{

"id": 122,

"type": "ModelSamplingAuraFlow",

"pos": [

348.7427062988281,

64.01853942871094

],

"size": [

250,

60

],

"flags": {},

"order": 6,

"mode": 0,

"inputs": [

{

"name": "model",

"type": "MODEL",

"link": 246

}

],

"outputs": [

{

"name": "MODEL",

"type": "MODEL",

"links": [

268

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.48",

"Node name for S&R": "ModelSamplingAuraFlow"

},

"widgets_values": [

3.5

]

},

{

"id": 134,

"type": "CFGNorm",

"pos": [

640.608642578125,

64.13288879394531

],

"size": [

270,

58

],

"flags": {},

"order": 9,

"mode": 0,

"inputs": [

{

"name": "model",

"type": "MODEL",

"link": 268

}

],

"outputs": [

{

"name": "patched_model",

"type": "MODEL",

"links": [

269

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.60",

"Node name for S&R": "CFGNorm"

},

"widgets_values": [

1

]

}

],

"links": [

[

22,

5,

0,

16,

2,

"CONDITIONING"

],

[

112,

21,

0,

66,

0,

"IMAGE"

],

[

115,

16,

0,

21,

0,

"LATENT"

],

[

211,

75,

0,

21,

1,

"VAE"

],

[

246,

68,

0,

122,

0,

"MODEL"

],

[

250,

124,

0,

16,

3,

"LATENT"

],

[

256,

126,

0,

128,

0,

"IMAGE"

],

[

258,

128,

0,

131,

0,

"IMAGE"

],

[

259,

131,

0,

124,

0,

"INT"

],

[

260,

131,

1,

124,

1,

"INT"

],

[

261,

131,

2,

124,

2,

"INT"

],

[

262,

121,

0,

5,

0,

"CLIP"

],

[

264,

75,

0,

132,

1,

"VAE"

],

[

265,

121,

0,

132,

0,

"CLIP"

],

[

266,

128,

0,

132,

2,

"IMAGE"

],

[

267,

132,

0,

16,

1,

"CONDITIONING"

],

[

268,

122,

0,

134,

0,

"MODEL"

],

[

269,

134,

0,

16,

0,

"MODEL"

]

],

"groups": [],

"config": {},

"extra": {

"ds": {

"scale": 0.6830134553650707,

"offset": [

412.60638590616935,

135.43195411893404

]

},

"frontendVersion": "1.26.13"

},

"version": 0.4

}
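Because the workflow JSON is long, a copy-and-paste slip can be hard to spot. The following is a minimal sketch of a consistency check, assuming the layout visible in the JSON above: each entry of `links` is `[link_id, source_node, source_slot, target_node, target_slot, type]`, node inputs carry a `link` field, and node outputs carry a `links` list. The function name `check_links` is hypothetical and is not a ComfyUI API.

```python
def check_links(workflow):
    """Return a list of human-readable problems found in a workflow dict."""
    problems = []
    node_ids = {node["id"] for node in workflow["nodes"]}
    link_ids = {link[0] for link in workflow["links"]}

    # Every link must connect two nodes that exist.
    for link_id, src, _src_slot, dst, _dst_slot, _type in workflow["links"]:
        if src not in node_ids:
            problems.append(f"link {link_id}: unknown source node {src}")
        if dst not in node_ids:
            problems.append(f"link {link_id}: unknown target node {dst}")

    # Every link id used by a node must be defined in the links table.
    for node in workflow["nodes"]:
        for inp in node.get("inputs", []):
            link_id = inp.get("link")
            if link_id is not None and link_id not in link_ids:
                problems.append(
                    f"node {node['id']}: input '{inp['name']}' uses missing link {link_id}"
                )
        for out in node.get("outputs", []):
            for link_id in out.get("links") or []:
                if link_id not in link_ids:
                    problems.append(
                        f"node {node['id']}: output '{out['name']}' uses missing link {link_id}"
                    )
    return problems
```

To use it, save the JSON to a file, load it with `json.load`, and pass the resulting dict to `check_links`. An empty list means no dangling references were found; it does not guarantee that the workflow produces the intended image.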

Start ComfyUI and create the following workflow. This workflow loads the qwen_image_edit_fp8_e4m3fn.safetensors model.

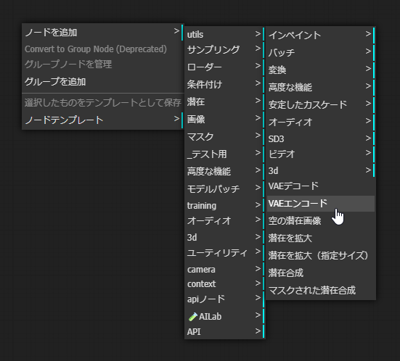

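The [Scale Image to Total Pixels] node (ImageScaleToTotalPixels) is in the following menu.

[Add Node] > [image] > [upscaling] > [Scale Image to Total Pixels]

The [VAE Encode] and [VAE Decode] nodes are in the following menus.

[Add Node] > [latent] > [VAE Encode]

[Add Node] > [latent] > [VAE Decode]

The [TextEncodeQwenImageEdit] node is in the following menu.

[Add Node] > [advanced] > [conditioning] > [TextEncodeQwenImageEdit]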
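To give a feel for what the ImageScaleToTotalPixels node does to the input image, here is a minimal sketch of the arithmetic. It is an assumption about the node's behavior, not code from ComfyUI: it assumes the node keeps the aspect ratio, treats one megapixel as 1024 × 1024 pixels, and rounds each side to the nearest integer. The function name `scale_to_total_pixels` is hypothetical.

```python
import math


def scale_to_total_pixels(width, height, megapixels=1.0):
    """Return (new_width, new_height) with roughly `megapixels` total pixels."""
    total = int(megapixels * 1024 * 1024)          # assumed definition of a megapixel
    scale_by = math.sqrt(total / (width * height)) # same factor for both sides
    return round(width * scale_by), round(height * scale_by)
```

Under these assumptions, `scale_to_total_pixels(2048, 1024)` gives `(1448, 724)`, so a large input is reduced to about one megapixel before it is encoded.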

The workflow JSON is as follows.

{

"id": "6a5f7751-1158-4b96-98bc-e6736ab017f2",

"revision": 0,

"last_node_id": 134,

"last_link_id": 272,

"nodes": [

{

"id": 121,

"type": "CLIPLoader",

"pos": [

-259.2861633300781,

204.64100646972656

],

"size": [

500,

110

],

"flags": {},

"order": 0,

"mode": 0,

"inputs": [],

"outputs": [

{

"name": "CLIP",

"type": "CLIP",

"links": [

263,

264

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.48",

"Node name for S&R": "CLIPLoader"

},

"widgets_values": [

"qwen-image\\qwen_2.5_vl_7b_fp8_scaled.safetensors",

"qwen_image",

"default"

]

},

{

"id": 134,

"type": "VAEEncode",

"pos": [

586.6044311523438,

656.461181640625

],

"size": [

140,

46

],

"flags": {},

"order": 7,

"mode": 0,

"inputs": [

{

"name": "pixels",

"type": "IMAGE",

"link": 267

},

{

"name": "vae",

"type": "VAE",

"link": 272

}

],

"outputs": [

{

"name": "LATENT",

"type": "LATENT",

"links": [

268

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.51",

"Node name for S&R": "VAEEncode"

},

"widgets_values": []

},

{

"id": 132,

"type": "TextEncodeQwenImageEdit",

"pos": [

334.2413330078125,

462.22906494140625

],

"size": [

394.5230712890625,

130.1999969482422

],

"flags": {},

"order": 4,

"mode": 0,

"inputs": [

{

"name": "clip",

"type": "CLIP",

"link": 264

},

{

"name": "vae",

"shape": 7,

"type": "VAE",

"link": null

},

{

"name": "image",

"shape": 7,

"type": "IMAGE",

"link": null

}

],

"outputs": [

{

"name": "CONDITIONING",

"type": "CONDITIONING",

"links": [

271

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.51",

"Node name for S&R": "TextEncodeQwenImageEdit"

},

"widgets_values": [

""

]

},

{

"id": 68,

"type": "UNETLoader",

"pos": [

-259.2861633300781,

64.64099884033203

],

"size": [

500,

90

],

"flags": {},

"order": 1,

"mode": 0,

"inputs": [],

"outputs": [

{

"name": "MODEL",

"type": "MODEL",

"slot_index": 0,

"links": [

246

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.47",

"Node name for S&R": "UNETLoader"

},

"widgets_values": [

"qwen-image-edit\\qwen_image_edit_fp8_e4m3fn.safetensors",

"default"

]

},

{

"id": 75,

"type": "VAELoader",

"pos": [

-259.2861633300781,

-55.35899353027344

],

"size": [

500,

70

],

"flags": {},

"order": 2,

"mode": 0,

"inputs": [],

"outputs": [

{

"name": "VAE",

"type": "VAE",

"slot_index": 0,

"links": [

211,

254,

272

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.47",

"Node name for S&R": "VAELoader"

},

"widgets_values": [

"qwen-image\\qwen_image_vae.safetensors"

]

},

{

"id": 133,

"type": "ImageScaleToTotalPixels",

"pos": [

3.769843101501465,

658.942626953125

],

"size": [

281.8999938964844,

82

],

"flags": {},

"order": 6,

"mode": 0,

"inputs": [

{

"name": "image",

"type": "IMAGE",

"link": 266

}

],

"outputs": [

{

"name": "IMAGE",

"type": "IMAGE",

"links": [

267,

269

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.51",

"Node name for S&R": "ImageScaleToTotalPixels"

},

"widgets_values": [

"nearest-exact",

1

]

},

{

"id": 122,

"type": "ModelSamplingAuraFlow",

"pos": [

470.9951171875,

62.554443359375

],

"size": [

250,

60

],

"flags": {},

"order": 5,

"mode": 0,

"inputs": [

{

"name": "model",

"type": "MODEL",

"link": 246

}

],

"outputs": [

{

"name": "MODEL",

"type": "MODEL",

"links": [

247

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.48",

"Node name for S&R": "ModelSamplingAuraFlow"

},

"widgets_values": [

3.5

]

},

{

"id": 16,

"type": "KSampler",

"pos": [

824.5587158203125,

62.22126770019531

],

"size": [

330,

270

],

"flags": {},

"order": 9,

"mode": 0,

"inputs": [

{

"name": "model",

"type": "MODEL",

"link": 247

},

{

"name": "positive",

"type": "CONDITIONING",

"link": 270

},

{

"name": "negative",

"type": "CONDITIONING",

"link": 271

},

{

"name": "latent_image",

"type": "LATENT",

"link": 268

}

],

"outputs": [

{

"name": "LATENT",

"type": "LATENT",

"slot_index": 0,

"links": [

115

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.47",

"Node name for S&R": "KSampler"

},

"widgets_values": [

1040342518450219,

"randomize",

20,

4,

"euler",

"simple",

1

]

},

{

"id": 21,

"type": "VAEDecode",

"pos": [

1193.985595703125,

-71.69037628173828

],

"size": [

200,

60

],

"flags": {

"collapsed": false

},

"order": 10,

"mode": 0,

"inputs": [

{

"name": "samples",

"type": "LATENT",

"link": 115

},

{

"name": "vae",

"type": "VAE",

"link": 211

}

],

"outputs": [

{

"name": "IMAGE",

"type": "IMAGE",

"slot_index": 0,

"links": [

112

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.47",

"Node name for S&R": "VAEDecode"

},

"widgets_values": []

},

{

"id": 66,

"type": "SaveImage",

"pos": [

1427.2532958984375,

-70.22738647460938

],

"size": [

518.892822265625,

429.1415100097656

],

"flags": {},

"order": 11,

"mode": 0,

"inputs": [

{

"name": "images",

"type": "IMAGE",

"link": 112

}

],

"outputs": [],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.47",

"Node name for S&R": "SaveImage"

},

"widgets_values": [

"%date:yyyyMMdd_hhmmss%_t2i_Qwen-Image-fp8_test"

]

},

{

"id": 127,

"type": "TextEncodeQwenImageEdit",

"pos": [

335.15234375,

211.72129821777344

],

"size": [

392.6794738769531,

200

],

"flags": {},

"order": 8,

"mode": 0,

"inputs": [

{

"name": "clip",

"type": "CLIP",

"link": 263

},

{

"name": "vae",

"shape": 7,

"type": "VAE",

"link": 254

},

{

"name": "image",

"shape": 7,

"type": "IMAGE",

"link": 269

}

],

"outputs": [

{

"name": "CONDITIONING",

"type": "CONDITIONING",

"links": [

270

]

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.51",

"Node name for S&R": "TextEncodeQwenImageEdit"

},

"widgets_values": [

"change from back image"

]

},

{

"id": 126,

"type": "LoadImage",

"pos": [

-469.4084777832031,

660.1057739257812

],

"size": [

413.09088134765625,

406.1212463378906

],

"flags": {},

"order": 3,

"mode": 0,

"inputs": [],

"outputs": [

{

"name": "IMAGE",

"type": "IMAGE",

"links": [

266

]

},

{

"name": "MASK",

"type": "MASK",

"links": null

}

],

"properties": {

"cnr_id": "comfy-core",

"ver": "0.3.51",

"Node name for S&R": "LoadImage"

},

"widgets_values": [

"example.png",

"image"

]

}

],

"links": [

[

112,

21,

0,

66,

0,

"IMAGE"

],

[

115,

16,

0,

21,

0,

"LATENT"

],

[

211,

75,

0,

21,

1,

"VAE"

],

[

246,

68,

0,

122,

0,

"MODEL"

],

[

247,

122,

0,

16,

0,

"MODEL"

],

[

254,

75,

0,

127,

1,

"VAE"

],

[

263,

121,

0,

127,

0,

"CLIP"

],

[

264,

121,

0,

132,

0,

"CLIP"

],

[

266,

126,

0,

133,

0,

"IMAGE"

],

[

267,

133,

0,

134,

0,

"IMAGE"

],

[

268,

134,

0,

16,

3,

"LATENT"

],

[

269,

133,

0,

127,

2,

"IMAGE"

],

[

270,

127,

0,

16,

1,

"CONDITIONING"

],

[

271,

132,

0,

16,

2,

"CONDITIONING"

],

[

272,

75,

0,

134,

1,

"VAE"

]

],

"groups": [],

"config": {},

"extra": {

"ds": {

"scale": 0.6209213230591552,

"offset": [

484.01674713948194,

195.91119463371578

]

},

"frontendVersion": "1.25.9"

},

"version": 0.4

}
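The loader nodes in the JSON above store model paths such as `qwen-image\\qwen_image_vae.safetensors`, which correspond to the subfolder placement described earlier. The following minimal sketch lists the model files a workflow expects. The mapping from loader type to model directory is inferred from this article (UNETLoader to diffusion_models, CLIPLoader to text_encoders, VAELoader to vae), and `referenced_models` is a hypothetical helper name.

```python
# Loader node type -> directory under models/, as used in this article.
LOADER_DIRS = {
    "UNETLoader": "diffusion_models",
    "CLIPLoader": "text_encoders",
    "VAELoader": "vae",
}


def referenced_models(workflow):
    """Return (model_directory, relative_path) pairs for each loader node."""
    result = []
    for node in workflow["nodes"]:
        category = LOADER_DIRS.get(node["type"])
        if category is None:
            continue  # not a loader node
        values = node.get("widgets_values") or []
        if values:
            # The first widget value is the file path; normalize Windows separators.
            result.append((category, str(values[0]).replace("\\", "/")))
    return result
```

If the files were placed directly in the model directories rather than in the `qwen-image` or `qwen-image-edit` subfolders, the paths reported here will not match, and the file has to be reselected in each loader node.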

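Run Qwen-Image-Edit. Set the input image in the [Load Image] node of the workflow you created.

Enter the editing prompt in the [TextEncodeQwenImageEdit] node. The workflow JSON above stores `change from back image` as this node's prompt.

Click the [Run] button to generate the image. About 10 GB of VRAM is used.

The result shown in the figure below is displayed. A rear view of the subject in the input image was generated.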
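As an alternative to typing the prompt in the UI, the prompt can be written into the workflow JSON before it is loaded. The following is a minimal sketch, assuming the prompt is the first entry of `widgets_values` and that the positive-prompt node is the one whose image input is connected, as in the JSON above. `set_edit_prompt` is a hypothetical helper name, not a ComfyUI function.

```python
import copy


def set_edit_prompt(workflow, prompt, node_type="TextEncodeQwenImageEdit"):
    """Return a copy of the workflow with `prompt` set on image-linked encoder nodes."""
    updated = copy.deepcopy(workflow)  # leave the caller's dict untouched
    changed = 0
    for node in updated["nodes"]:
        if node["type"] != node_type:
            continue
        # Heuristic: the node with a connected image input carries the edit prompt.
        image_linked = any(
            inp["name"].startswith("image") and inp.get("link") is not None
            for inp in node.get("inputs", [])
        )
        if image_linked:
            values = node.setdefault("widgets_values", [""])
            if values:
                values[0] = prompt
            else:
                values.append(prompt)
            changed += 1
    if changed == 0:
        raise ValueError(f"no image-linked {node_type} node found")
    return updated
```

The returned dict can be written back with `json.dump` and loaded into ComfyUI. The encoder node without an image link, which serves as the negative prompt in the workflow above, is left unchanged.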

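Start ComfyUI and create the following workflow. This workflow also loads qwen_image_edit_fp8_e4m3fn.safetensors, but it sizes an empty latent from the scaled input image instead of encoding the image with VAE Encode.

The [TextEncodeQwenImageEdit] node is in the following menu.

[Add Node] > [advanced] > [conditioning] > [TextEncodeQwenImageEdit]

The [FluxKontextImageScale] node is in the following menu.

[Add Node] > [advanced] > [conditioning] > [flux] > [FluxKontextImageScale]

The [Get Image Size] node is in the following menu.

[Add Node] > [image] > [Get Image Size]

For the [ModelSamplingAuraFlow] node and the [EmptySD3LatentImage] node, see the Qwen-Image article.

The workflow JSON is as follows.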

{

"id": "3a7e25d6-98b1-41e2-8b14-46d22e5c77e4",

"revision": 0,

"last_node_id": 131,

"last_link_id": 263,

"nodes": [

{

"id": 68,

"type": "UNETLoader",

"pos": [

-259.2861633300781,

64.64099884033203

],

"size": [

500,

90

],

"flags": {},

"order": 0,

"mode": 0,

"inputs": [],

"outputs": [

{

"name": "MODEL",

"type": "MODEL",

"slot_index": 0,

"links": [

246

]

}

],

"properties": {

"Node name for S&R": "UNETLoader",

"cnr_id": "comfy-core",

"ver": "0.3.47"

},

"widgets_values": [

"qwen-image-edit\\qwen_image_edit_fp8_e4m3fn.safetensors",

"default"

]

},

{

"id": 121,

"type": "CLIPLoader",

"pos": [

-259.2861633300781,

204.64100646972656

],

"size": [

500,

110

],

"flags": {},

"order": 1,

"mode": 0,

"inputs": [],

"outputs": [

{

"name": "CLIP",

"type": "CLIP",

"links": [

262,

263

]

}

],

"properties": {

"Node name for S&R": "CLIPLoader",

"cnr_id": "comfy-core",

"ver": "0.3.48"

},

"widgets_values": [

"qwen-image\\qwen_2.5_vl_7b_fp8_scaled.safetensors",

"qwen_image",

"default"

]

},

{

"id": 75,

"type": "VAELoader",

"pos": [

-259.2861633300781,

-55.35899353027344

],

"size": [

500,

70

],

"flags": {},

"order": 2,

"mode": 0,

"inputs": [],

"outputs": [

{

"name": "VAE",

"type": "VAE",

"slot_index": 0,

"links": [

211,

254

]

}

],

"properties": {

"Node name for S&R": "VAELoader",

"cnr_id": "comfy-core",

"ver": "0.3.47"

},

"widgets_values": [

"qwen-image\\qwen_image_vae.safetensors"

]

},

{

"id": 5,

"type": "CLIPTextEncode",

"pos": [

329.2820129394531,

474.30780029296875

],

"size": [

500,

90

],

"flags": {

"collapsed": false

},

"order": 5,

"mode": 0,

"inputs": [

{

"name": "clip",

"type": "CLIP",

"link": 262

}

],

"outputs": [

{

"name": "CONDITIONING",

"type": "CONDITIONING",

"slot_index": 0,

"links": [

22

]

}

],

"title": "CLIP Text Encode (Negative)",

"properties": {

"Node name for S&R": "CLIPTextEncode",

"cnr_id": "comfy-core",

"ver": "0.3.47"

},

"widgets_values": [

""

]

},

{

"id": 122,

"type": "ModelSamplingAuraFlow",

"pos": [

527.3628540039062,

62.554447174072266

],

"size": [

250,

60

],

"flags": {},

"order": 4,

"mode": 0,

"inputs": [

{

"name": "model",

"type": "MODEL",

"link": 246

}

],

"outputs": [

{

"name": "MODEL",

"type": "MODEL",

"links": [

247

]

}

],

"properties": {

"Node name for S&R": "ModelSamplingAuraFlow",

"cnr_id": "comfy-core",

"ver": "0.3.48"

},

"widgets_values": [

3.5

]

},

{

"id": 127,

"type": "TextEncodeQwenImageEdit",

"pos": [

335.15234375,

211.72129821777344

],

"size": [

490.774169921875,

200

],

"flags": {},

"order": 7,

"mode": 0,

"inputs": [

{

"name": "clip",

"type": "CLIP",

"link": 263

},

{

"name": "vae",

"shape": 7,

"type": "VAE",

"link": 254

},

{

"name": "image",

"shape": 7,

"type": "IMAGE",

"link": 257

}

],

"outputs": [

{

"name": "CONDITIONING",

"type": "CONDITIONING",

"links": [

253

]

}

],

"properties": {

"Node name for S&R": "TextEncodeQwenImageEdit"

},

"widgets_values": [

"change from back image"

]

},

{

"id": 16,

"type": "KSampler",

"pos": [

979.0615844726562,

58.50777816772461

],

"size": [

330,

270

],

"flags": {},

"order": 10,

"mode": 0,

"inputs": [

{

"name": "model",

"type": "MODEL",

"link": 247

},

{

"name": "positive",

"type": "CONDITIONING",

"link": 253

},

{

"name": "negative",

"type": "CONDITIONING",

"link": 22

},

{

"name": "latent_image",

"type": "LATENT",

"link": 250

}

],

"outputs": [

{

"name": "LATENT",

"type": "LATENT",

"slot_index": 0,

"links": [

115

]

}

],

"properties": {

"Node name for S&R": "KSampler",

"cnr_id": "comfy-core",

"ver": "0.3.47"

},

"widgets_values": [

46193712770199,

"randomize",

20,

4,

"euler",

"simple",

1

]

},

{

"id": 21,

"type": "VAEDecode",

"pos": [

1342.4583740234375,

-68.53590393066406

],

"size": [

200,

60

],

"flags": {

"collapsed": false

},

"order": 11,

"mode": 0,

"inputs": [

{

"name": "samples",

"type": "LATENT",

"link": 115

},

{

"name": "vae",

"type": "VAE",

"link": 211

}

],

"outputs": [

{

"name": "IMAGE",

"type": "IMAGE",

"slot_index": 0,

"links": [

112

]

}

],

"properties": {

"Node name for S&R": "VAEDecode",

"cnr_id": "comfy-core",

"ver": "0.3.47"

},

"widgets_values": []

},

{

"id": 66,

"type": "SaveImage",

"pos": [

1589.38232421875,

-68.40391540527344

],

"size": [

518.892822265625,

429.1415100097656

],

"flags": {},

"order": 12,

"mode": 0,

"inputs": [

{

"name": "images",

"type": "IMAGE",

"link": 112

}

],

"outputs": [],

"properties": {

"Node name for S&R": "SaveImage",

"cnr_id": "comfy-core",

"ver": "0.3.47"

},

"widgets_values": [

"%date:yyyyMMdd_hhmmss%_t2i_Qwen-Image-fp8_test"

]

},

{

"id": 124,

"type": "EmptySD3LatentImage",

"pos": [

598.39599609375,

620.0835571289062

],

"size": [

330,

110

],

"flags": {},

"order": 9,

"mode": 0,

"inputs": [

{

"name": "width",

"type": "INT",

"widget": {

"name": "width"

},

"link": 259

},

{

"name": "height",

"type": "INT",

"widget": {

"name": "height"

},

"link": 260

},

{

"name": "batch_size",

"type": "INT",

"widget": {

"name": "batch_size"

},

"link": 261

}

],

"outputs": [

{

"name": "LATENT",

"type": "LATENT",

"links": [

250

]

}

],

"properties": {

"Node name for S&R": "EmptySD3LatentImage",

"cnr_id": "comfy-core",

"ver": "0.3.48"

},

"widgets_values": [

928,

1664,

1

]

},

{

"id": 128,

"type": "FluxKontextImageScale",

"pos": [

80.96746826171875,

630.9010620117188

],

"size": [

187.75448608398438,

26

],

"flags": {},

"order": 6,

"mode": 0,

"inputs": [

{

"name": "image",

"type": "IMAGE",

"link": 256

}

],

"outputs": [

{

"name": "IMAGE",

"type": "IMAGE",

"links": [

257,

258

]

}

],

"properties": {

"Node name for S&R": "FluxKontextImageScale"

},

"widgets_values": []

},

{

"id": 126,

"type": "LoadImage",

"pos": [

-360.30859375,

628.9431762695312

],

"size": [

413.09088134765625,

406.1212463378906

],

"flags": {},

"order": 3,

"mode": 0,

"inputs": [],

"outputs": [

{

"name": "IMAGE",

"type": "IMAGE",

"links": [

256

]

},

{

"name": "MASK",

"type": "MASK",

"links": null

}

],

"properties": {

"Node name for S&R": "LoadImage"

},

"widgets_values": [

"example.png",

"image"

]

},

{

"id": 131,

"type": "GetImageSize",

"pos": [

366.3339538574219,

629.4735107421875

],

"size": [

140,

124

],

"flags": {},

"order": 8,

"mode": 0,

"inputs": [

{

"name": "image",

"type": "IMAGE",

"link": 258

}

],

"outputs": [

{

"name": "width",

"type": "INT",

"links": [

259

]

},

{

"name": "height",

"type": "INT",

"links": [

260

]

},

{

"name": "batch_size",

"type": "INT",

"links": [

261

]

}

],

"properties": {

"Node name for S&R": "GetImageSize"

},

"widgets_values": []

}

],

"links": [

[

22,

5,

0,

16,

2,

"CONDITIONING"

],

[

112,

21,

0,

66,

0,

"IMAGE"

],

[

115,

16,

0,

21,

0,

"LATENT"

],

[

211,

75,

0,

21,

1,

"VAE"

],

[

246,

68,

0,

122,

0,

"MODEL"

],

[

247,

122,

0,

16,

0,

"MODEL"

],

[

250,

124,

0,

16,

3,

"LATENT"

],

[

253,

127,

0,

16,

1,

"CONDITIONING"

],

[

254,

75,

0,

127,

1,

"VAE"

],

[

256,

126,

0,

128,

0,

"IMAGE"

],

[

257,

128,

0,

127,

2,

"IMAGE"

],

[

258,

128,

0,

131,

0,

"IMAGE"

],

[

259,

131,

0,

124,

0,

"INT"

],

[

260,

131,

1,

124,

1,

"INT"

],

[

261,

131,

2,

124,

2,

"INT"

],

[

262,

121,

0,

5,

0,

"CLIP"

],

[

263,

121,

0,

127,

0,

"CLIP"

]

],

"groups": [],

"config": {},

"extra": {

"ds": {

"scale": 0.6830134553650707,

"offset": [

384.6166376973953,

158.54167400660108

]

},

"frontendVersion": "1.25.9"

},

"version": 0.4

}
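To see how the nodes in the JSON above fit together without opening the UI, the nodes can be listed by their `order` field. This is a minimal sketch that assumes `order` reflects the sequence in which the graph editor schedules the nodes; `execution_summary` is a hypothetical helper name.

```python
def execution_summary(workflow):
    """Return (order, node_type, node_id) tuples sorted by the node's order field."""
    ordered = sorted(workflow["nodes"], key=lambda node: node["order"])
    return [(node["order"], node["type"], node["id"]) for node in ordered]
```

Printing each tuple on its own line gives a compact outline of the pipeline, starting with the loader nodes and ending with the VAEDecode and SaveImage nodes.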

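Run Qwen-Image-Edit. Set the input image in the [Load Image] node of the workflow you created.

Enter the editing prompt in the [TextEncodeQwenImageEdit] node. The workflow JSON above already stores the prompt used here in this node's widgets_values.

Click the [Run] button to generate the image. About 10 GB of VRAM is used.

The result shown in the figure below is displayed. A rear view of the subject in the input image was generated.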